mirror of

https://github.com/OMGeeky/gpt-pilot.git

synced 2026-01-06 11:19:33 +01:00

fix(gitignore): rm cache

This commit is contained in:

133

.github/CODE_OF_CONDUCT.md

vendored

Normal file

133

.github/CODE_OF_CONDUCT.md

vendored

Normal file

@@ -0,0 +1,133 @@

|

||||

|

||||

# Contributor Covenant Code of Conduct

|

||||

|

||||

## Our Pledge

|

||||

|

||||

We as members, contributors, and leaders pledge to make participation in our

|

||||

community a harassment-free experience for everyone, regardless of age, body

|

||||

size, visible or invisible disability, ethnicity, sex characteristics, gender

|

||||

identity and expression, level of experience, education, socio-economic status,

|

||||

nationality, personal appearance, race, religion, or sexual identity

|

||||

and orientation.

|

||||

|

||||

We pledge to act and interact in ways that contribute to an open, welcoming,

|

||||

diverse, inclusive, and healthy community.

|

||||

|

||||

## Our Standards

|

||||

|

||||

Examples of behavior that contributes to a positive environment for our

|

||||

community include:

|

||||

|

||||

* Demonstrating empathy and kindness toward other people

|

||||

* Being respectful of differing opinions, viewpoints, and experiences

|

||||

* Giving and gracefully accepting constructive feedback

|

||||

* Accepting responsibility and apologizing to those affected by our mistakes,

|

||||

and learning from the experience

|

||||

* Focusing on what is best not just for us as individuals, but for the

|

||||

overall community

|

||||

|

||||

Examples of unacceptable behavior include:

|

||||

|

||||

* The use of sexualized language or imagery, and sexual attention or

|

||||

advances of any kind

|

||||

* Trolling, insulting or derogatory comments, and personal or political attacks

|

||||

* Public or private harassment

|

||||

* Publishing others' private information, such as a physical or email

|

||||

address, without their explicit permission

|

||||

* Other conduct which could reasonably be considered inappropriate in a

|

||||

professional setting

|

||||

|

||||

## Enforcement Responsibilities

|

||||

|

||||

Community leaders are responsible for clarifying and enforcing our standards of

|

||||

acceptable behavior and will take appropriate and fair corrective action in

|

||||

response to any behavior that they deem inappropriate, threatening, offensive,

|

||||

or harmful.

|

||||

|

||||

Community leaders have the right and responsibility to remove, edit, or reject

|

||||

comments, commits, code, wiki edits, issues, and other contributions that are

|

||||

not aligned to this Code of Conduct, and will communicate reasons for moderation

|

||||

decisions when appropriate.

|

||||

|

||||

## Scope

|

||||

|

||||

This Code of Conduct applies within all community spaces, and also applies when

|

||||

an individual is officially representing the community in public spaces.

|

||||

Examples of representing our community include using an official e-mail address,

|

||||

posting via an official social media account, or acting as an appointed

|

||||

representative at an online or offline event.

|

||||

|

||||

## Enforcement

|

||||

|

||||

Instances of abusive, harassing, or otherwise unacceptable behavior may be

|

||||

reported to the community leaders responsible for enforcement at

|

||||

[INSERT CONTACT METHOD].

|

||||

All complaints will be reviewed and investigated promptly and fairly.

|

||||

|

||||

All community leaders are obligated to respect the privacy and security of the

|

||||

reporter of any incident.

|

||||

|

||||

## Enforcement Guidelines

|

||||

|

||||

Community leaders will follow these Community Impact Guidelines in determining

|

||||

the consequences for any action they deem in violation of this Code of Conduct:

|

||||

|

||||

### 1. Correction

|

||||

|

||||

**Community Impact**: Use of inappropriate language or other behavior deemed

|

||||

unprofessional or unwelcome in the community.

|

||||

|

||||

**Consequence**: A private, written warning from community leaders, providing

|

||||

clarity around the nature of the violation and an explanation of why the

|

||||

behavior was inappropriate. A public apology may be requested.

|

||||

|

||||

### 2. Warning

|

||||

|

||||

**Community Impact**: A violation through a single incident or series

|

||||

of actions.

|

||||

|

||||

**Consequence**: A warning with consequences for continued behavior. No

|

||||

interaction with the people involved, including unsolicited interaction with

|

||||

those enforcing the Code of Conduct, for a specified period of time. This

|

||||

includes avoiding interactions in community spaces as well as external channels

|

||||

like social media. Violating these terms may lead to a temporary or

|

||||

permanent ban.

|

||||

|

||||

### 3. Temporary Ban

|

||||

|

||||

**Community Impact**: A serious violation of community standards, including

|

||||

sustained inappropriate behavior.

|

||||

|

||||

**Consequence**: A temporary ban from any sort of interaction or public

|

||||

communication with the community for a specified period of time. No public or

|

||||

private interaction with the people involved, including unsolicited interaction

|

||||

with those enforcing the Code of Conduct, is allowed during this period.

|

||||

Violating these terms may lead to a permanent ban.

|

||||

|

||||

### 4. Permanent Ban

|

||||

|

||||

**Community Impact**: Demonstrating a pattern of violation of community

|

||||

standards, including sustained inappropriate behavior, harassment of an

|

||||

individual, or aggression toward or disparagement of classes of individuals.

|

||||

|

||||

**Consequence**: A permanent ban from any sort of public interaction within

|

||||

the community.

|

||||

|

||||

## Attribution

|

||||

|

||||

This Code of Conduct is adapted from the [Contributor Covenant][homepage],

|

||||

version 2.0, available at

|

||||

[https://www.contributor-covenant.org/version/2/0/code_of_conduct.html][v2.0].

|

||||

|

||||

Community Impact Guidelines were inspired by

|

||||

[Mozilla's code of conduct enforcement ladder][Mozilla CoC].

|

||||

|

||||

For answers to common questions about this code of conduct, see the FAQ at

|

||||

[https://www.contributor-covenant.org/faq][FAQ]. Translations are available

|

||||

at [https://www.contributor-covenant.org/translations][translations].

|

||||

|

||||

[homepage]: https://www.contributor-covenant.org

|

||||

[v2.0]: https://www.contributor-covenant.org/version/2/0/code_of_conduct.html

|

||||

[Mozilla CoC]: https://github.com/mozilla/diversity

|

||||

[FAQ]: https://www.contributor-covenant.org/faq

|

||||

[translations]: https://www.contributor-covenant.org/translations

|

||||

0

.github/CONTRIBUTING.md

vendored

Normal file

0

.github/CONTRIBUTING.md

vendored

Normal file

176

.gitignore

vendored

Normal file

176

.gitignore

vendored

Normal file

@@ -0,0 +1,176 @@

|

||||

# Byte-compiled / optimized / DLL files

|

||||

__pycache__/

|

||||

*.py[cod]

|

||||

*$py.class

|

||||

|

||||

# C extensions

|

||||

*.so

|

||||

|

||||

# Distribution / packaging

|

||||

.Python

|

||||

build/

|

||||

develop-eggs/

|

||||

dist/

|

||||

downloads/

|

||||

eggs/

|

||||

.eggs/

|

||||

lib/

|

||||

lib64/

|

||||

parts/

|

||||

sdist/

|

||||

var/

|

||||

wheels/

|

||||

share/python-wheels/

|

||||

*.egg-info/

|

||||

.installed.cfg

|

||||

*.egg

|

||||

MANIFEST

|

||||

|

||||

# PyInstaller

|

||||

# Usually these files are written by a python script from a template

|

||||

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

||||

*.manifest

|

||||

*.spec

|

||||

|

||||

# Installer logs

|

||||

pip-log.txt

|

||||

pip-delete-this-directory.txt

|

||||

|

||||

# Unit test / coverage reports

|

||||

htmlcov/

|

||||

.tox/

|

||||

.nox/

|

||||

.coverage

|

||||

.coverage.*

|

||||

.cache

|

||||

nosetests.xml

|

||||

coverage.xml

|

||||

*.cover

|

||||

*.py,cover

|

||||

.hypothesis/

|

||||

.pytest_cache/

|

||||

cover/

|

||||

|

||||

# Translations

|

||||

*.mo

|

||||

*.pot

|

||||

|

||||

# Django stuff:

|

||||

*.log

|

||||

local_settings.py

|

||||

db.sqlite3

|

||||

db.sqlite3-journal

|

||||

|

||||

# Flask stuff:

|

||||

instance/

|

||||

.webassets-cache

|

||||

|

||||

# Scrapy stuff:

|

||||

.scrapy

|

||||

|

||||

# Sphinx documentation

|

||||

docs/_build/

|

||||

|

||||

# PyBuilder

|

||||

.pybuilder/

|

||||

target/

|

||||

|

||||

# Jupyter Notebook

|

||||

.ipynb_checkpoints

|

||||

|

||||

# IPython

|

||||

profile_default/

|

||||

ipython_config.py

|

||||

|

||||

# pyenv

|

||||

# For a library or package, you might want to ignore these files since the code is

|

||||

# intended to run in multiple environments; otherwise, check them in:

|

||||

# .python-version

|

||||

|

||||

# pipenv

|

||||

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

||||

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

||||

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

||||

# install all needed dependencies.

|

||||

#Pipfile.lock

|

||||

|

||||

# poetry

|

||||

# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

|

||||

# This is especially recommended for binary packages to ensure reproducibility, and is more

|

||||

# commonly ignored for libraries.

|

||||

# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

|

||||

#poetry.lock

|

||||

|

||||

# pdm

|

||||

# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

|

||||

#pdm.lock

|

||||

# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

|

||||

# in version control.

|

||||

# https://pdm.fming.dev/#use-with-ide

|

||||

.pdm.toml

|

||||

|

||||

# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

|

||||

__pypackages__/

|

||||

|

||||

# Celery stuff

|

||||

celerybeat-schedule

|

||||

celerybeat.pid

|

||||

|

||||

# SageMath parsed files

|

||||

*.sage.py

|

||||

|

||||

# Environments

|

||||

.env

|

||||

.venv

|

||||

env/

|

||||

venv/

|

||||

ENV/

|

||||

env.bak/

|

||||

venv.bak/

|

||||

|

||||

# Spyder project settings

|

||||

.spyderproject

|

||||

.spyproject

|

||||

|

||||

# Rope project settings

|

||||

.ropeproject

|

||||

|

||||

# mkdocs documentation

|

||||

/site

|

||||

|

||||

# mypy

|

||||

.mypy_cache/

|

||||

.dmypy.json

|

||||

dmypy.json

|

||||

|

||||

# Pyre type checker

|

||||

.pyre/

|

||||

|

||||

# pytype static type analyzer

|

||||

.pytype/

|

||||

|

||||

# Cython debug symbols

|

||||

cython_debug/

|

||||

|

||||

# PyCharm

|

||||

# JetBrains specific template is maintained in a separate JetBrains.gitignore that can

|

||||

# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore

|

||||

# and can be added to the global gitignore or merged into this file. For a more nuclear

|

||||

# option (not recommended) you can uncomment the following to ignore the entire idea folder.

|

||||

.idea/

|

||||

|

||||

|

||||

# Logger

|

||||

/pilot/logger/debug.log

|

||||

|

||||

#sqlite

|

||||

/pilot/gpt-pilot

|

||||

|

||||

# workspace

|

||||

workspace

|

||||

|

||||

# pilot env

|

||||

pilot-env

|

||||

./pilot-env/**/*

|

||||

pilot-env/**/*

|

||||

pilot-env/bin

|

||||

21

LICENSE

Normal file

21

LICENSE

Normal file

@@ -0,0 +1,21 @@

|

||||

MIT License

|

||||

|

||||

Copyright (c) 2023 Pythagora-io

|

||||

|

||||

Permission is hereby granted, free of charge, to any person obtaining a copy

|

||||

of this software and associated documentation files (the "Software"), to deal

|

||||

in the Software without restriction, including without limitation the rights

|

||||

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

||||

copies of the Software, and to permit persons to whom the Software is

|

||||

furnished to do so, subject to the following conditions:

|

||||

|

||||

The above copyright notice and this permission notice shall be included in all

|

||||

copies or substantial portions of the Software.

|

||||

|

||||

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

||||

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

||||

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

||||

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

||||

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

||||

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

||||

SOFTWARE.

|

||||

163

README.md

Normal file

163

README.md

Normal file

@@ -0,0 +1,163 @@

|

||||

# 🧑✈️ GPT PILOT

|

||||

### GPT Pilot codes the entire app as you oversee the code being written

|

||||

|

||||

---

|

||||

|

||||

This is a research project to see how can GPT-4 be utilized to generate fully working, production-ready, apps. **The main idea is that AI can write most of the code for an app (maybe 95%) but for the rest 5%, a developer is and will be needed until we get full AGI**.

|

||||

|

||||

I've broken down the idea behind GPT Pilot and how it works in the following blog posts:

|

||||

|

||||

**[[Part 1/3] High-level concepts + GPT Pilot workflow until the coding part](https://blog.pythagora.ai/2023/08/23/430/)**

|

||||

|

||||

**_[Part 2/3] GPT Pilot coding workflow (COMING UP)_**

|

||||

|

||||

**_[Part 3/3] Other important concepts and future plans (COMING UP)_**

|

||||

|

||||

---

|

||||

|

||||

### **[👉 Examples of apps written by GPT Pilot 👈](#-examples)**

|

||||

|

||||

<br>

|

||||

|

||||

https://github.com/Pythagora-io/gpt-pilot/assets/10895136/0495631b-511e-451b-93d5-8a42acf22d3d

|

||||

|

||||

<br>

|

||||

|

||||

## Main pillars of GPT Pilot:

|

||||

1. For AI to create a fully working app, **a developer needs to be involved** in the process of app creation. They need to be able to change the code at any moment and GPT Pilot needs to continue working with those changes (eg. add an API key or fix an issue if an AI gets stuck) <br><br>

|

||||

2. **The app needs to be written step by step as a developer would write it** - Let's say you want to create a simple app and you know everything you need to code and have the entire architecture in your head. Even then, you won't code it out entirely, then run it for the first time and debug all the issues at once. Rather, you will implement something simple, like add routes, run it, see how it works, and then move on to the next task. This way, you can debug issues as they arise. The same should be in the case when AI codes. It will make mistakes for sure so in order for it to have an easier time debugging issues and for the developer to understand what is happening, the AI shouldn't just spit out the entire codebase at once. Rather, the app should be developed step by step just like a developer would code it - eg. setup routes, add database connection, etc. <br><br>

|

||||

3. **The approach needs to be scalable** so that AI can create a production ready app

|

||||

1. **Context rewinding** - for solving each development task, the context size of the first message to the LLM has to be relatively the same. For example, the context size of the first LLM message while implementing development task #5 has to be more or less the same as the first message while developing task #50. Because of this, the conversation needs to be rewound to the first message upon each task. [See the diagram here](https://blogpythagora.files.wordpress.com/2023/08/pythagora-product-development-frame-3-1.jpg?w=1714).

|

||||

2. **Recursive conversations** are LLM conversations that are set up in a way that they can be used “recursively”. For example, if GPT Pilot detects an error, it needs to debug it but let’s say that, during the debugging process, another error happens. Then, GPT Pilot needs to stop debugging the first issue, fix the second one, and then get back to fixing the first issue. This is a very important concept that, I believe, needs to work to make AI build large and scalable apps by itself. It works by rewinding the context and explaining each error in the recursion separately. Once the deepest level error is fixed, we move up in the recursion and continue fixing that error. We do this until the entire recursion is completed.

|

||||

3. **TDD (Test Driven Development)** - for GPT Pilot to be able to scale the codebase, it will need to be able to create new code without breaking previously written code. There is no better way to do this than working with TDD methodology. For each code that GPT Pilot writes, it needs to write tests that check if the code works as intended so that whenever new changes are made, all previous tests can be run.

|

||||

|

||||

The idea is that AI won't be able to (at least in the near future) create apps from scratch without the developer being involved. That's why we created an interactive tool that generates code but also requires the developer to check each step so that they can understand what's going on and so that the AI can have a better overview of the entire codebase.

|

||||

|

||||

Obviously, it still can't create any production-ready app but the general concept of how this could work is there.

|

||||

|

||||

# 🔌 Requirements

|

||||

|

||||

|

||||

- **Python**

|

||||

- **PostgreSQL** (optional, projects default is SQLite)

|

||||

- DB is needed for multiple reasons like continuing app development if you had to stop at any point or app crashed, going back to specific step so you can change some later steps in development, easier debugging, for future we will add functionality to update project (change some things in existing project or add new features to the project and so on)...

|

||||

|

||||

|

||||

# 🚦How to start using gpt-pilot?

|

||||

After you have Python and PostgreSQL installed, follow these steps:

|

||||

1. `git clone https://github.com/Pythagora-io/gpt-pilot.git` (clone the repo)

|

||||

2. `cd gpt-pilot`

|

||||

3. `python -m venv pilot-env` (create a virtual environment)

|

||||

4. `source pilot-env/bin/activate` (activate the virtual environment)

|

||||

5. `pip install -r requirements.txt` (install the dependencies)

|

||||

6. `cd pilot`

|

||||

7. `mv .env.example .env` (create the .env file)

|

||||

8. Add your environment (OpenAI/Azure), your API key and the SQLite/PostgreSQL database info to the `.env` file

|

||||

- to change from SQLite to PostgreSQL in your .env just set `DATABASE_TYPE=postgres`

|

||||

9. `python db_init.py` (initialize the database)

|

||||

10. `python main.py` (start GPT Pilot)

|

||||

|

||||

After, this, you can just follow the instructions in the terminal.

|

||||

|

||||

All generated code will be stored in the folder `workspace` inside the folder named after the app name you enter upon starting the pilot.

|

||||

|

||||

**IMPORTANT: To run GPT Pilot, you need to have PostgreSQL set up on your machine**

|

||||

<br>

|

||||

|

||||

# 🧑💻️ Other arguments

|

||||

- continue working on an existing app

|

||||

```bash

|

||||

python main.py app_id=<ID_OF_THE_APP>

|

||||

```

|

||||

|

||||

- continue working on an existing app from a specific step

|

||||

```bash

|

||||

python main.py app_id=<ID_OF_THE_APP> step=<STEP_FROM_CONST_COMMON>

|

||||

```

|

||||

|

||||

- continue working on an existing app from a specific development step

|

||||

```bash

|

||||

python main.py app_id=<ID_OF_THE_APP> skip_until_dev_step=<DEV_STEP>

|

||||

```

|

||||

This is basically the same as `step` but during the actual development process. If you want to play around with gpt-pilot, this is likely the flag you will often use.

|

||||

<br>

|

||||

- erase all development steps previously done and continue working on an existing app from start of development

|

||||

```bash

|

||||

python main.py app_id=<ID_OF_THE_APP> skip_until_dev_step=0

|

||||

```

|

||||

|

||||

# 🔎 Examples

|

||||

|

||||

Here are a couple of example apps GPT Pilot created by itself:

|

||||

|

||||

### Real-time chat app

|

||||

- 💬 Prompt: `A simple chat app with real time communication`

|

||||

- ▶️ [Video of the app creation process](https://youtu.be/bUj9DbMRYhA)

|

||||

- 💻️ [GitHub repo](https://github.com/Pythagora-io/gpt-pilot-chat-app-demo)

|

||||

|

||||

<p align="left">

|

||||

<img src="https://github.com/Pythagora-io/gpt-pilot/assets/10895136/85bc705c-be88-4ca1-9a3b-033700b97a22" alt="gpt-pilot demo chat app" width="500px"/>

|

||||

</p>

|

||||

|

||||

|

||||

### Markdown editor

|

||||

- 💬 Prompt: `Build a simple markdown editor using HTML, CSS, and JavaScript. Allow users to input markdown text and display the formatted output in real-time.`

|

||||

- ▶️ [Video of the app creation process](https://youtu.be/uZeA1iX9dgg)

|

||||

- 💻️ [GitHub repo](https://github.com/Pythagora-io/gpt-pilot-demo-markdown-editor.git)

|

||||

|

||||

<p align="left">

|

||||

<img src="https://github.com/Pythagora-io/gpt-pilot/assets/10895136/dbe1ccc3-b126-4df0-bddb-a524d6a386a8" alt="gpt-pilot demo markdown editor" width="500px"/>

|

||||

</p>

|

||||

|

||||

|

||||

### Timer app

|

||||

- 💬 Prompt: `Create a simple timer app using HTML, CSS, and JavaScript that allows users to set a countdown timer and receive an alert when the time is up.`

|

||||

- ▶️ [Video of the app creation process](https://youtu.be/CMN3W18zfiE)

|

||||

- 💻️ [GitHub repo](https://github.com/Pythagora-io/gpt-pilot-timer-app-demo)

|

||||

|

||||

<p align="left">

|

||||

<img src="https://github.com/Pythagora-io/gpt-pilot/assets/10895136/93bed40b-b769-4c8b-b16d-b80fb6fc73e0" alt="gpt-pilot demo markdown editor" width="500px"/>

|

||||

</p>

|

||||

|

||||

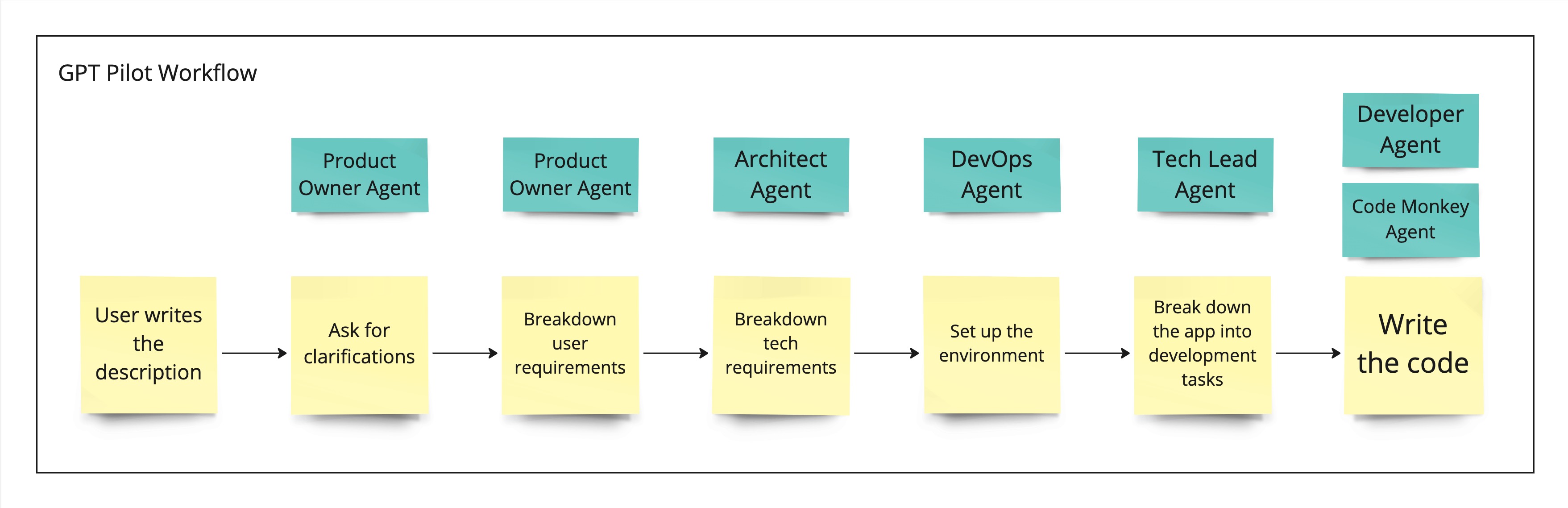

# 🏗 How GPT Pilot works?

|

||||

Here are the steps GPT Pilot takes to create an app:

|

||||

|

||||

|

||||

|

||||

1. You enter the app name and the description

|

||||

2. **Product Owner agent** asks a couple of questions to understand the requirements better

|

||||

3. **Product Owner agent** writes user stories and asks you if they are all correct (this helps it create code later on)

|

||||

4. **Architect agent** writes up technologies that will be used for the app

|

||||

5. **DevOps agent** checks if all technologies are installed on the machine and installs them if they are not

|

||||

6. **Tech Lead agent** writes up development tasks that Developer will need to implement. This is an important part because, for each step, Tech Lead needs to specify how the user (real world developer) can review if the task is done (eg. open localhost:3000 and do something)

|

||||

7. **Developer agent** takes each task and writes up what needs to be done to implement it. The description is in human readable form.

|

||||

8. Finally, **Code Monkey agent** takes the Developer's description and the currently implement file and implements the changes into it. We realized this works much better than giving it to Developer right away to implement changes.

|

||||

|

||||

|

||||

|

||||

<br>

|

||||

|

||||

# 🕴How's GPT Pilot different from _Smol developer_ and _GPT engineer_?

|

||||

- **Human developer is involved throughout the process** - I don't think that AI can (at least in the near future) create apps without a developer being involved. Also, I think it's hard for a developer to get into a big codebase and try debugging it. That's why my idea was for AI to develop the app step by step where each step is reviewed by the developer. If you want to change some code yourself, you can just change it and GPT Pilot will continue developing on top of those changes.

|

||||

<br><br>

|

||||

- **Continuous development loops** - The goal behind this project was to see how we can create recursive conversations with GPT so that it can debug any issue and implement any feature. For example, after the app is generated, you can always add more instructions about what you want to implement or debug. I wanted to see if this can be so flexible that, regardless of the app's size, it can just iterate and build bigger and bigger apps

|

||||

<br><br>

|

||||

- **Auto debugging** - when it detects an error, it debugs it by itself. I still haven't implemented writing automated tests which should make this fully autonomous but for now, you can input the error that's happening (eg. within a UI) and GPT Pilot will debug it from there. The plan is to make it write automated tests in Cypress as well so that it can test it by itself and debug without the developer's explanation.

|

||||

|

||||

# 🍻 Contributing

|

||||

If you are interested in contributing to GPT Pilot, I would be more than happy to have you on board but also help you get started. Feel free to ping [zvonimir@pythagora.ai](mailto:zvonimir@pythagora.ai) and I'll help you get started.

|

||||

|

||||

## 🔬️ Research

|

||||

Since this is a research project, there are many areas that need to be researched on both practical and theoretical levels. We're happy to hear how can the entire GPT Pilot concept be improved. For example, maybe it would work better if we structured functional requirements differently or maybe technical requirements need to be specified in a different way.

|

||||

|

||||

## 🖥 Development

|

||||

Other than the research, GPT Pilot needs to be debugged to work in different scenarios. For example, we realized that the quality of the code generated is very sensitive to the size of the development task. When the task is too broad, the code has too many bugs that are hard to fix but when the development task is too narrow, GPT also seems to struggle in getting the task implemented into the existing code.

|

||||

|

||||

# 🔗 Connect with us

|

||||

🌟 As an open source tool, it would mean the world to us if you starred the GPT-pilot repo 🌟

|

||||

|

||||

💬 Join [the Discord server](https://discord.gg/HaqXugmxr9) to get in touch.

|

||||

<br><br>

|

||||

<br><br>

|

||||

|

||||

<br><br>

|

||||

25

pilot/.env.example

Normal file

25

pilot/.env.example

Normal file

@@ -0,0 +1,25 @@

|

||||

# OPENAI or AZURE or OPENROUTER

|

||||

ENDPOINT=OPENAI

|

||||

|

||||

OPENAI_ENDPOINT=

|

||||

OPENAI_API_KEY=

|

||||

|

||||

AZURE_API_KEY=

|

||||

AZURE_ENDPOINT=

|

||||

|

||||

OPENROUTER_API_KEY=

|

||||

OPENROUTER_ENDPOINT=https://openrouter.ai/api/v1/chat/completions

|

||||

|

||||

# In case of Azure/OpenRouter endpoint, change this to your deployed model name

|

||||

MODEL_NAME=gpt-4

|

||||

# MODEL_NAME=openai/gpt-3.5-turbo-16k

|

||||

MAX_TOKENS=8192

|

||||

|

||||

# Database

|

||||

# DATABASE_TYPE=postgres

|

||||

|

||||

DB_NAME=gpt-pilot

|

||||

DB_HOST=

|

||||

DB_PORT=

|

||||

DB_USER=

|

||||

DB_PASSWORD=

|

||||

0

pilot/__init__.py

Normal file

0

pilot/__init__.py

Normal file

4

pilot/const/code_execution.py

Normal file

4

pilot/const/code_execution.py

Normal file

@@ -0,0 +1,4 @@

|

||||

MAX_COMMAND_DEBUG_TRIES = 3

|

||||

MIN_COMMAND_RUN_TIME = 2000

|

||||

MAX_COMMAND_RUN_TIME = 30000

|

||||

MAX_COMMAND_OUTPUT_LENGTH = 2000

|

||||

31

pilot/const/common.py

Normal file

31

pilot/const/common.py

Normal file

@@ -0,0 +1,31 @@

|

||||

APP_TYPES = ['Web App', 'Script', 'Mobile App (unavailable)', 'Chrome Extension (unavailable)']

|

||||

ROLES = {

|

||||

'product_owner': ['project_description', 'user_stories', 'user_tasks'],

|

||||

'architect': ['architecture'],

|

||||

'tech_lead': ['development_planning'],

|

||||

'full_stack_developer': ['create_scripts', 'coding'],

|

||||

'dev_ops': ['environment_setup'],

|

||||

}

|

||||

STEPS = [

|

||||

'project_description',

|

||||

'user_stories',

|

||||

'user_tasks',

|

||||

'architecture',

|

||||

'development_planning',

|

||||

'environment_setup',

|

||||

'coding'

|

||||

]

|

||||

|

||||

IGNORE_FOLDERS = [

|

||||

'.git',

|

||||

'.idea',

|

||||

'.vscode',

|

||||

'__pycache__',

|

||||

'node_modules',

|

||||

'package-lock.json',

|

||||

'venv',

|

||||

'dist',

|

||||

'build',

|

||||

]

|

||||

|

||||

PROMPT_DATA_TO_IGNORE = {'directory_tree', 'name'}

|

||||

47

pilot/const/convert_to_playground_convo.js

Normal file

47

pilot/const/convert_to_playground_convo.js

Normal file

@@ -0,0 +1,47 @@

|

||||

let messages = {{messages}}

|

||||

|

||||

function sleep(ms) {

|

||||

return new Promise(resolve => setTimeout(resolve, ms));

|

||||

}

|

||||

|

||||

async function fill_playground(messages) {

|

||||

let system_messages = messages.filter(msg => msg.role === 'system');

|

||||

if (system_messages.length > 0) {

|

||||

let system_message_textarea = document.querySelector('.chat-pg-instructions').querySelector('textarea');

|

||||

system_message_textarea.focus();

|

||||

system_message_textarea.value = '';

|

||||

document.execCommand("insertText", false, system_messages[0].content);

|

||||

await sleep(100);

|

||||

}

|

||||

|

||||

// Remove all previous messages

|

||||

let remove_buttons = document.querySelectorAll('.chat-message-remove-button');

|

||||

for (let j = 0; j < 10; j++) {

|

||||

for (let i = 0; i < remove_buttons.length; i++) {

|

||||

let clickEvent = new Event('click', {

|

||||

'bubbles': true,

|

||||

'cancelable': true

|

||||

});

|

||||

remove_buttons[i].dispatchEvent(clickEvent);

|

||||

}

|

||||

}

|

||||

|

||||

let other_messages = messages.filter(msg => msg.role !== 'system');

|

||||

|

||||

for (let i = 0; i < other_messages.length; i++) {

|

||||

document.querySelector('.add-message').click()

|

||||

await sleep(100);

|

||||

}

|

||||

|

||||

for (let i = 0; i < other_messages.length; i++) {

|

||||

let all_elements = document.querySelectorAll('.text-input-with-focus');

|

||||

let last_user_document = all_elements[i];

|

||||

|

||||

textarea_to_fill = last_user_document.querySelector('textarea');

|

||||

textarea_to_fill.focus();

|

||||

document.execCommand("insertText", false, other_messages[i].content);

|

||||

await sleep(100);

|

||||

}

|

||||

}

|

||||

|

||||

fill_playground(messages)

|

||||

550

pilot/const/function_calls.py

Normal file

550

pilot/const/function_calls.py

Normal file

@@ -0,0 +1,550 @@

|

||||

def process_user_stories(stories):

|

||||

return stories

|

||||

|

||||

|

||||

def process_user_tasks(tasks):

|

||||

return tasks

|

||||

|

||||

|

||||

def process_os_technologies(technologies):

|

||||

return technologies

|

||||

|

||||

|

||||

def run_commands(commands):

|

||||

return commands

|

||||

|

||||

|

||||

def return_files(files):

|

||||

# TODO get file

|

||||

return files

|

||||

|

||||

|

||||

def return_array_from_prompt(name_plural, name_singular, return_var_name):

|

||||

return {

|

||||

'name': f'process_{name_plural.replace(" ", "_")}',

|

||||

'description': f"Print the list of {name_plural} that are created.",

|

||||

'parameters': {

|

||||

'type': 'object',

|

||||

"properties": {

|

||||

f"{return_var_name}": {

|

||||

"type": "array",

|

||||

"description": f"List of {name_plural} that are created in a list.",

|

||||

"items": {

|

||||

"type": "string",

|

||||

"description": f"{name_singular}"

|

||||

},

|

||||

},

|

||||

},

|

||||

"required": [return_var_name],

|

||||

},

|

||||

}

|

||||

|

||||

|

||||

def command_definition(description_command=f'A single command that needs to be executed.', description_timeout=f'Timeout in milliseconds that represent the approximate time this command takes to finish. If you need to run a command that doesnt\'t finish by itself (eg. a command to run an app), put the timeout to 3000 milliseconds. If you need to create a directory that doesn\'t exist and is not the root project directory, always create it by running a command `mkdir`'):

|

||||

return {

|

||||

'type': 'object',

|

||||

'description': 'Command that needs to be run to complete the current task. This should be used only if the task is of a type "command".',

|

||||

'properties': {

|

||||

'command': {

|

||||

'type': 'string',

|

||||

'description': description_command,

|

||||

},

|

||||

'timeout': {

|

||||

'type': 'number',

|

||||

'description': description_timeout,

|

||||

}

|

||||

},

|

||||

'required': ['command', 'timeout'],

|

||||

}

|

||||

|

||||

|

||||

USER_STORIES = {

|

||||

'definitions': [

|

||||

return_array_from_prompt('user stories', 'user story', 'stories')

|

||||

],

|

||||

'functions': {

|

||||

'process_user_stories': process_user_stories

|

||||

},

|

||||

}

|

||||

|

||||

USER_TASKS = {

|

||||

'definitions': [

|

||||

return_array_from_prompt('user tasks', 'user task', 'tasks')

|

||||

],

|

||||

'functions': {

|

||||

'process_user_tasks': process_user_tasks

|

||||

},

|

||||

}

|

||||

|

||||

ARCHITECTURE = {

|

||||

'definitions': [

|

||||

return_array_from_prompt('technologies', 'technology', 'technologies')

|

||||

],

|

||||

'functions': {

|

||||

'process_technologies': lambda technologies: technologies

|

||||

},

|

||||

}

|

||||

|

||||

FILTER_OS_TECHNOLOGIES = {

|

||||

'definitions': [

|

||||

return_array_from_prompt('os specific technologies', 'os specific technology', 'technologies')

|

||||

],

|

||||

'functions': {

|

||||

'process_os_specific_technologies': process_os_technologies

|

||||

},

|

||||

}

|

||||

|

||||

INSTALL_TECH = {

|

||||

'definitions': [

|

||||

return_array_from_prompt('os specific technologies', 'os specific technology', 'technologies')

|

||||

],

|

||||

'functions': {

|

||||

'process_os_specific_technologies': process_os_technologies

|

||||

},

|

||||

}

|

||||

|

||||

COMMANDS_TO_RUN = {

|

||||

'definitions': [

|

||||

return_array_from_prompt('commands', 'command', 'commands')

|

||||

],

|

||||

'functions': {

|

||||

'process_commands': run_commands

|

||||

},

|

||||

}

|

||||

|

||||

DEV_TASKS_BREAKDOWN = {

|

||||

'definitions': [

|

||||

{

|

||||

'name': 'break_down_development_task',

|

||||

'description': 'Breaks down the development task into smaller steps that need to be done to implement the entire task.',

|

||||

'parameters': {

|

||||

'type': 'object',

|

||||

"properties": {

|

||||

"tasks": {

|

||||

'type': 'array',

|

||||

'description': 'List of smaller development steps that need to be done to complete the entire task.',

|

||||

'items': {

|

||||

'type': 'object',

|

||||

'description': 'A smaller development step that needs to be done to complete the entire task. Remember, if you need to run a command that doesnt\'t finish by itself (eg. a command to run an app), put the timeout to 3000 milliseconds. If you need to create a directory that doesn\'t exist and is not the root project directory, always create it by running a command `mkdir`',

|

||||

'properties': {

|

||||

'type': {

|

||||

'type': 'string',

|

||||

'enum': ['command', 'code_change', 'human_intervention'],

|

||||

'description': 'Type of the development step that needs to be done to complete the entire task.',

|

||||

},

|

||||

'command': command_definition(f'A single command that needs to be executed.', 'Timeout in milliseconds that represent the approximate time the command takes to finish. This should be used only if the task is of a type "command". If you need to run a command that doesnt\'t finish by itself (eg. a command to run an app), put the timeout to 3000 milliseconds. Remember, this is not in seconds but in milliseconds so likely it always needs to be greater than 1000.'),

|

||||

'code_change_description': {

|

||||

'type': 'string',

|

||||

'description': 'Description of a the development step that needs to be done. This should be used only if the task is of a type "code_change" and it should thoroughly describe what needs to be done to implement the code change for a single file - it cannot include changes for multiple files.',

|

||||

},

|

||||

'human_intervention_description': {

|

||||

'type': 'string',

|

||||

'description': 'Description of a task that requires a human to do.',

|

||||

},

|

||||

},

|

||||

'required': ['type'],

|

||||

}

|

||||

}

|

||||

},

|

||||

"required": ['tasks'],

|

||||

},

|

||||

},

|

||||

],

|

||||

'functions': {

|

||||

'break_down_development_task': lambda tasks: tasks

|

||||

},

|

||||

}

|

||||

|

||||

IMPLEMENT_TASK = {

|

||||

'definitions': [

|

||||

{

|

||||

'name': 'parse_development_task',

|

||||

'description': 'Breaks down the development task into smaller steps that need to be done to implement the entire task.',

|

||||

'parameters': {

|

||||

'type': 'object',

|

||||

"properties": {

|

||||

"tasks": {

|

||||

'type': 'array',

|

||||

'description': 'List of smaller development steps that need to be done to complete the entire task.',

|

||||

'items': {

|

||||

'type': 'object',

|

||||

'description': 'A smaller development step that needs to be done to complete the entire task. Remember, if you need to run a command that doesnt\'t finish by itself (eg. a command to run an If you need to create a directory that doesn\'t exist and is not the root project directory, always create it by running a command `mkdir`',

|

||||

'properties': {

|

||||

'type': {

|

||||

'type': 'string',

|

||||

'enum': ['command', 'code_change', 'human_intervention'],

|

||||

'description': 'Type of the development step that needs to be done to complete the entire task.',

|

||||

},

|

||||

'command': command_definition(),

|

||||

'code_change': {

|

||||

'type': 'object',

|

||||

'description': 'A code change that needs to be implemented. This should be used only if the task is of a type "code_change".',

|

||||

'properties': {

|

||||

'name': {

|

||||

'type': 'string',

|

||||

'description': 'Name of the file that needs to be implemented.',

|

||||

},

|

||||

'path': {

|

||||

'type': 'string',

|

||||

'description': 'Full path of the file with the file name that needs to be implemented.',

|

||||

},

|

||||

'content': {

|

||||

'type': 'string',

|

||||

'description': 'Full content of the file that needs to be implemented.',

|

||||

},

|

||||

},

|

||||

'required': ['name', 'path', 'content'],

|

||||

},

|

||||

'human_intervention_description': {

|

||||

'type': 'string',

|

||||

'description': 'Description of a step in debugging this issue when there is a human intervention needed. This should be used only if the task is of a type "human_intervention".',

|

||||

},

|

||||

},

|

||||

'required': ['type'],

|

||||

}

|

||||

}

|

||||

},

|

||||

"required": ['tasks'],

|

||||

},

|

||||

},

|

||||

],

|

||||

'functions': {

|

||||

'parse_development_task': lambda tasks: tasks

|

||||

},

|

||||

}

|

||||

|

||||

DEV_STEPS = {

|

||||

'definitions': [

|

||||

{

|

||||

'name': 'break_down_development_task',

|

||||

'description': 'Breaks down the development task into smaller steps that need to be done to implement the entire task.',

|

||||

'parameters': {

|

||||

'type': 'object',

|

||||

"properties": {

|

||||

"tasks": {

|

||||

'type': 'array',

|

||||

'description': 'List of development steps that need to be done to complete the entire task.',

|

||||

'items': {

|

||||

'type': 'object',

|

||||

'description': 'Development step that needs to be done to complete the entire task.',

|

||||

'properties': {

|

||||

'type': {

|

||||

'type': 'string',

|

||||

'description': 'Type of the development step that needs to be done to complete the entire task - it can be "command" or "code_change".',

|

||||

},

|

||||

'description': {

|

||||

'type': 'string',

|

||||

'description': 'Description of the development step that needs to be done.',

|

||||

},

|

||||

},

|

||||

'required': ['type', 'description'],

|

||||

}

|

||||

}

|

||||

},

|

||||

"required": ['tasks'],

|

||||

},

|

||||

},

|

||||

{

|

||||

'name': 'run_commands',

|

||||

'description': 'Run all commands in the given list. Each command needs to be a single command that can be executed.',

|

||||

'parameters': {

|

||||

'type': 'object',

|

||||

"properties": {

|

||||

"commands": {

|

||||

'type': 'array',

|

||||

'description': 'List of commands that need to be run to complete the currrent task. Each command cannot be anything other than a single CLI command that can be independetly run.',

|

||||

'items': {

|

||||

'type': 'string',

|

||||

'description': 'A single command that needs to be run to complete the current task.',

|

||||

}

|

||||

}

|

||||

},

|

||||

"required": ['commands'],

|

||||

},

|

||||

},

|

||||

{

|

||||

'name': 'process_code_changes',

|

||||

'description': 'Implements all the code changes outlined in the description.',

|

||||

'parameters': {

|

||||

'type': 'object',

|

||||

"properties": {

|

||||

"code_change_description": {

|

||||

'type': 'string',

|

||||

'description': 'A detailed description of what needs to be done to implement all the code changes from the task.',

|

||||

}

|

||||

},

|

||||

"required": ['code_change_description'],

|

||||

},

|

||||

},

|

||||

{

|

||||

'name': 'get_files',

|

||||

'description': f'Returns development files that are currently implemented so that they can be analized and so that changes can be appropriatelly made.',

|

||||

'parameters': {

|

||||

'type': 'object',

|

||||

'properties': {

|

||||

'files': {

|

||||

'type': 'array',

|

||||

'description': f'List of files that need to be analized to implement the reqired changes.',

|

||||

'items': {

|

||||

'type': 'string',

|

||||

'description': f'A single file name that needs to be analized to implement the reqired changes. Remember, this is a file name with path relative to the project root. For example, if a file path is `{{project_root}}/models/model.py`, this value needs to be `models/model.py`.',

|

||||

}

|

||||

}

|

||||

},

|

||||

'required': ['files'],

|

||||

},

|

||||

}

|

||||

],

|

||||

'functions': {

|

||||

'break_down_development_task': lambda tasks: (tasks, 'more_tasks'),

|

||||

'run_commands': lambda commands: (commands, 'run_commands'),

|

||||

'process_code_changes': lambda code_change_description: (code_change_description, 'code_changes'),

|

||||

'get_files': return_files

|

||||

},

|

||||

}

|

||||

|

||||

CODE_CHANGES = {

|

||||

'definitions': [

|

||||

{

|

||||

'name': 'break_down_development_task',

|

||||

'description': 'Implements all the smaller tasks that need to be done to complete the entire development task.',

|

||||

'parameters': {

|

||||

'type': 'object',

|

||||

"properties": {

|

||||

"tasks": {

|

||||

'type': 'array',

|

||||

'description': 'List of smaller development steps that need to be done to complete the entire task.',

|

||||

'items': {

|

||||

'type': 'object',

|

||||

'description': 'A smaller development step that needs to be done to complete the entire task. Remember, if you need to run a command that doesnt\'t finish by itself (eg. a command to run an app), put the timeout to 3000 milliseconds. If you need to create a directory that doesn\'t exist and is not the root project directory, always create it by running a command `mkdir`',

|

||||

'properties': {

|

||||

'type': {

|

||||

'type': 'string',

|

||||

'enum': ['command', 'code_change'],

|

||||

'description': 'Type of the development step that needs to be done to complete the entire task.',

|

||||

},

|

||||

'command': command_definition('Command that needs to be run to complete the current task. This should be used only if the task is of a type "command".', 'Timeout in milliseconds that represent the approximate time the command takes to finish. This should be used only if the task is of a type "command". If you need to run a command that doesnt\'t finish by itself (eg. a command to run an app), put the timeout to 3000 milliseconds. Remember, this is not in seconds but in milliseconds so likely it always needs to be greater than 1000.'),

|

||||

'code_change_description': {

|

||||

'type': 'string',

|

||||

'description': 'Description of a the development step that needs to be done. This should be used only if the task is of a type "code_change" and it should thoroughly describe what needs to be done to implement the code change.',

|

||||

},

|

||||

},

|

||||

'required': ['type'],

|

||||

}

|

||||

}

|

||||

},

|

||||

"required": ['tasks'],

|

||||

},

|

||||

}

|

||||

],

|

||||

'functions': {

|

||||

'break_down_development_task': lambda tasks: tasks,

|

||||

},

|

||||

}

|

||||

|

||||

DEVELOPMENT_PLAN = {

|

||||

'definitions': [{

|

||||

'name': 'implement_development_plan',

|

||||

'description': 'Implements the development plan.',

|

||||

'parameters': {

|

||||

'type': 'object',

|

||||

"properties": {

|

||||

"plan": {

|

||||

"type": "array",

|

||||

"description": 'List of development tasks that need to be done to implement the entire plan.',

|

||||

"items": {

|

||||

"type": "object",

|

||||

'description': 'Development task that needs to be done to implement the entire plan.',

|

||||

'properties': {

|

||||

'description': {

|

||||

'type': 'string',

|

||||

'description': 'Description of the development task that needs to be done to implement the entire plan.',

|

||||

},

|

||||

'programmatic_goal': {

|

||||

'type': 'string',

|

||||

'description': 'programmatic goal that will determine if a task can be marked as done from a programmatic perspective (this will result in an automated test that is run before the task is sent to you for a review)',

|

||||

},

|

||||

'user_review_goal': {

|

||||

'type': 'string',

|

||||

'description': 'user-review goal that will determine if a task is done or not but from a user perspective since it will be reviewed by a human',

|

||||

}

|

||||

},

|

||||

'required': ['task_description', 'programmatic_goal', 'user_review_goal'],

|

||||

},

|

||||

},

|

||||

},

|

||||

"required": ['plan'],

|

||||

},

|

||||

}],

|

||||

'functions': {

|

||||

'implement_development_plan': lambda plan: plan

|

||||

},

|

||||

}

|

||||

|

||||

EXECUTE_COMMANDS = {

|

||||

'definitions': [{

|

||||

'name': 'execute_commands',

|

||||

'description': f'Executes a list of commands. ',

|

||||

'parameters': {

|

||||

'type': 'object',

|

||||

'properties': {

|

||||

'commands': {

|

||||

'type': 'array',

|

||||

'description': f'List of commands that need to be executed. Remember, if you need to run a command that doesnt\'t finish by itself (eg. a command to run an app), put the timeout to 3000 milliseconds. If you need to create a directory that doesn\'t exist and is not the root project directory, always create it by running a command `mkdir`',

|

||||

'items': command_definition(f'A single command that needs to be executed.', f'Timeout in milliseconds that represent the approximate time this command takes to finish. If you need to run a command that doesnt\'t finish by itself (eg. a command to run an app), put the timeout to 3000 milliseconds.')

|

||||

}

|

||||

},

|

||||

'required': ['commands'],

|

||||

},

|

||||

}],

|

||||

'functions': {

|

||||

'execute_commands': lambda commands: commands

|

||||

}

|

||||

}

|

||||

|

||||

GET_FILES = {

|

||||

'definitions': [{

|

||||

'name': 'get_files',

|

||||

'description': f'Returns development files that are currently implemented so that they can be analized and so that changes can be appropriatelly made.',

|

||||

'parameters': {

|

||||

'type': 'object',

|

||||

'properties': {

|

||||

'files': {

|

||||

'type': 'array',

|

||||

'description': f'List of files that need to be analized to implement the reqired changes. Any file name in this array MUST be from the directory tree listed in the previous message.',

|

||||

'items': {

|

||||

'type': 'string',

|

||||

'description': f'A single file name that needs to be analized to implement the reqired changes. Remember, this is a file name with path relative to the project root. For example, if a file path is `{{project_root}}/models/model.py`, this value needs to be `models/model.py`. This file name MUST be listed in the directory from the previous message.',

|

||||

}

|

||||

}

|

||||

},

|

||||

'required': ['files'],

|

||||

},

|

||||

}],

|

||||

'functions': {

|

||||

'get_files': lambda files: files

|

||||

}

|

||||

}

|

||||

|

||||

IMPLEMENT_CHANGES = {

|

||||

'definitions': [{

|

||||

'name': 'save_files',

|

||||

'description': 'Iterates over the files passed to this function and saves them on the disk.',

|

||||

'parameters': {

|

||||

'type': 'object',

|

||||

'properties': {

|

||||

'files': {

|

||||

'type': 'array',

|

||||

'description': 'List of files that need to be saved.',

|

||||

'items': {

|

||||

'type': 'object',

|

||||

'properties': {

|

||||

'name': {

|

||||

'type': 'string',

|

||||

'description': 'Name of the file that needs to be saved on the disk.',

|

||||

},

|

||||

'path': {

|

||||

'type': 'string',

|

||||

'description': 'Path of the file that needs to be saved on the disk.',

|

||||

},

|

||||

'content': {

|

||||

'type': 'string',

|

||||

'description': 'Full content of the file that needs to be saved on the disk.',

|

||||

},

|

||||

'description': {

|

||||

'type': 'string',

|

||||

'description': 'Description of the file that needs to be saved on the disk. This description doesn\'t need to explain what is being done currently in this task but rather what is the idea behind this file - what do we want to put in this file in the future. Write the description ONLY if this is the first time this file is being saved. If this file already exists on the disk, leave this field empty.',

|

||||

},

|

||||

},

|

||||

'required': ['name', 'path', 'content'],

|

||||

}

|

||||

}

|

||||

},

|

||||

'required': ['files'],

|

||||

},

|

||||

}],

|

||||

'functions': {

|

||||

'save_files': lambda files: files

|

||||

},

|

||||

'to_message': lambda files: [

|

||||

f'File `{file["name"]}` saved to the disk and currently looks like this:\n```\n{file["content"]}\n```' for file

|

||||

in files]

|

||||

}

|

||||

|

||||

GET_TEST_TYPE = {

|

||||

'definitions': [{

|

||||

'name': 'test_changes',

|

||||

'description': f'Tests the changes based on the test type.',

|

||||

'parameters': {

|

||||

'type': 'object',

|

||||

'properties': {

|

||||

'type': {

|

||||

'type': 'string',

|

||||

'description': f'Type of a test that needs to be run. If this is just an intermediate step in getting a task done, put `no_test` as the type and we\'ll just go onto the next task without testing.',

|

||||

'enum': ['automated_test', 'command_test', 'manual_test', 'no_test']

|

||||

},

|

||||

'command': command_definition('Command that needs to be run to test the changes.', 'Timeout in milliseconds that represent the approximate time this command takes to finish. If you need to run a command that doesnt\'t finish by itself (eg. a command to run an app), put the timeout to 3000 milliseconds. If you need to create a directory that doesn\'t exist and is not the root project directory, always create it by running a command `mkdir`'),

|

||||

'automated_test_description': {

|

||||

'type': 'string',

|

||||

'description': 'Description of an automated test that needs to be run to test the changes. This should be used only if the test type is "automated_test" and it should thoroughly describe what needs to be done to implement the automated test so that when someone looks at this test can know exactly what needs to be done to implement this automated test.',

|

||||

},

|

||||

'manual_test_description': {

|

||||

'type': 'string',

|

||||

'description': 'Description of a manual test that needs to be run to test the changes. This should be used only if the test type is "manual_test".',

|

||||

}

|

||||

},

|

||||

'required': ['type'],

|

||||

},

|

||||

}],

|

||||

'functions': {

|

||||

'test_changes': lambda type, command=None, automated_test_description=None, manual_test_description=None: (

|

||||

type, command, automated_test_description, manual_test_description)

|

||||

}

|

||||

}

|

||||

|

||||

DEBUG_STEPS_BREAKDOWN = {

|

||||

'definitions': [

|

||||

{

|

||||

'name': 'start_debugging',

|

||||

'description': 'Starts the debugging process based on the list of steps that need to be done to debug the problem.',

|

||||

'parameters': {

|

||||

'type': 'object',

|

||||

"properties": {

|

||||

"steps": {

|

||||

'type': 'array',

|

||||

'description': 'List of steps that need to be done to debug the problem.',

|

||||

'items': {

|

||||

'type': 'object',

|

||||

'description': 'A single step that needs to be done to get closer to debugging this issue. Remember, if you need to run a command that doesnt\'t finish by itself (eg. a command to run an app), put the timeout to 3000 milliseconds. If you need to create a directory that doesn\'t exist and is not the root project directory, always create it by running a command `mkdir`',

|

||||

'properties': {

|

||||

'type': {

|

||||

'type': 'string',

|

||||

'enum': ['command', 'code_change', 'human_intervention'],

|

||||

'description': 'Type of the step that needs to be done to debug this issue.',

|

||||

},

|

||||

'command': command_definition('Command that needs to be run to debug this issue.', 'Timeout in milliseconds that represent the approximate time this command takes to finish. If you need to run a command that doesnt\'t finish by itself (eg. a command to run an app), put the timeout to 3000 milliseconds.'),

|

||||

'code_change_description': {

|

||||

'type': 'string',

|

||||

'description': 'Description of a step in debugging this issue when there are code changes required. This should be used only if the task is of a type "code_change" and it should thoroughly describe what needs to be done to implement the code change for a single file - it cannot include changes for multiple files.',

|

||||

},

|

||||

'human_intervention_description': {

|

||||

'type': 'string',

|

||||

'description': 'Description of a step in debugging this issue when there is a human intervention needed. This should be used only if the task is of a type "human_intervention".',

|

||||

},

|

||||

"check_if_fixed": {

|

||||

'type': 'boolean',

|

||||

'description': 'Flag that indicates if the original command that triggered the error that\'s being debugged should be tried after this step to check if the error is fixed. If you think that the original command `delete node_modules/ && delete package-lock.json` will pass after this step, then this flag should be set to TRUE and if you think that the original command will still fail after this step, then this flag should be set to FALSE.',

|

||||

}

|

||||

},

|

||||

'required': ['type', 'check_if_fixed'],

|

||||

}

|

||||

}

|

||||

},

|

||||

"required": ['steps'],

|

||||

},

|

||||

},

|

||||

],

|

||||

'functions': {

|

||||

'start_debugging': lambda steps: steps

|

||||

},

|

||||

}

|

||||

5

pilot/const/llm.py

Normal file

5

pilot/const/llm.py

Normal file

@@ -0,0 +1,5 @@

|

||||

import os

|

||||

MAX_GPT_MODEL_TOKENS = int(os.getenv('MAX_TOKENS'))

|

||||

MIN_TOKENS_FOR_GPT_RESPONSE = 600

|

||||

MAX_QUESTIONS = 5

|

||||

END_RESPONSE = "EVERYTHING_CLEAR"

|

||||

0

pilot/database/__init__.py

Normal file

0

pilot/database/__init__.py

Normal file

8

pilot/database/config.py

Normal file

8

pilot/database/config.py

Normal file

@@ -0,0 +1,8 @@

|

||||

import os

|

||||

|

||||

DATABASE_TYPE = os.getenv("DATABASE_TYPE", "sqlite")

|

||||

DB_NAME = os.getenv("DB_NAME")

|

||||

DB_HOST = os.getenv("DB_HOST")

|

||||

DB_PORT = os.getenv("DB_PORT")

|

||||

DB_USER = os.getenv("DB_USER")

|

||||

DB_PASSWORD = os.getenv("DB_PASSWORD")

|

||||

22

pilot/database/connection/postgres.py

Normal file

22

pilot/database/connection/postgres.py

Normal file

@@ -0,0 +1,22 @@

|

||||

import psycopg2

|

||||

from peewee import PostgresqlDatabase

|

||||

from psycopg2.extensions import quote_ident

|

||||

from database.config import DB_NAME, DB_HOST, DB_PORT, DB_USER, DB_PASSWORD

|

||||

|

||||

def get_postgres_database():

|

||||

return PostgresqlDatabase(DB_NAME, user=DB_USER, password=DB_PASSWORD, host=DB_HOST, port=DB_PORT)

|

||||

|

||||

def create_postgres_database():

|

||||

conn = psycopg2.connect(

|

||||

dbname='postgres',

|

||||

user=DB_USER,

|

||||

password=DB_PASSWORD,

|

||||

host=DB_HOST,

|

||||

port=DB_PORT

|

||||

)

|

||||

conn.autocommit = True

|

||||

cursor = conn.cursor()

|

||||

safe_db_name = quote_ident(DB_NAME, conn)

|

||||

cursor.execute(f"CREATE DATABASE {safe_db_name}")

|

||||

cursor.close()

|

||||

conn.close()

|

||||

5

pilot/database/connection/sqlite.py

Normal file

5

pilot/database/connection/sqlite.py

Normal file

@@ -0,0 +1,5 @@

|

||||

from peewee import SqliteDatabase

|

||||

from database.config import DB_NAME

|

||||

|

||||

def get_sqlite_database():

|

||||

return SqliteDatabase(DB_NAME)

|

||||

441

pilot/database/database.py

Normal file

441

pilot/database/database.py

Normal file

@@ -0,0 +1,441 @@

|

||||

from playhouse.shortcuts import model_to_dict

|

||||

from peewee import *

|

||||

from termcolor import colored

|

||||

from functools import reduce

|

||||

import operator

|

||||

import psycopg2

|

||||

from const.common import PROMPT_DATA_TO_IGNORE

|

||||

from logger.logger import logger

|

||||

from psycopg2.extensions import quote_ident

|

||||

|

||||

from utils.utils import hash_data

|

||||

from database.config import DB_NAME, DB_HOST, DB_PORT, DB_USER, DB_PASSWORD, DATABASE_TYPE

|

||||

from database.models.components.base_models import database